The Founder's AI Reading List: Key Papers from Training Data

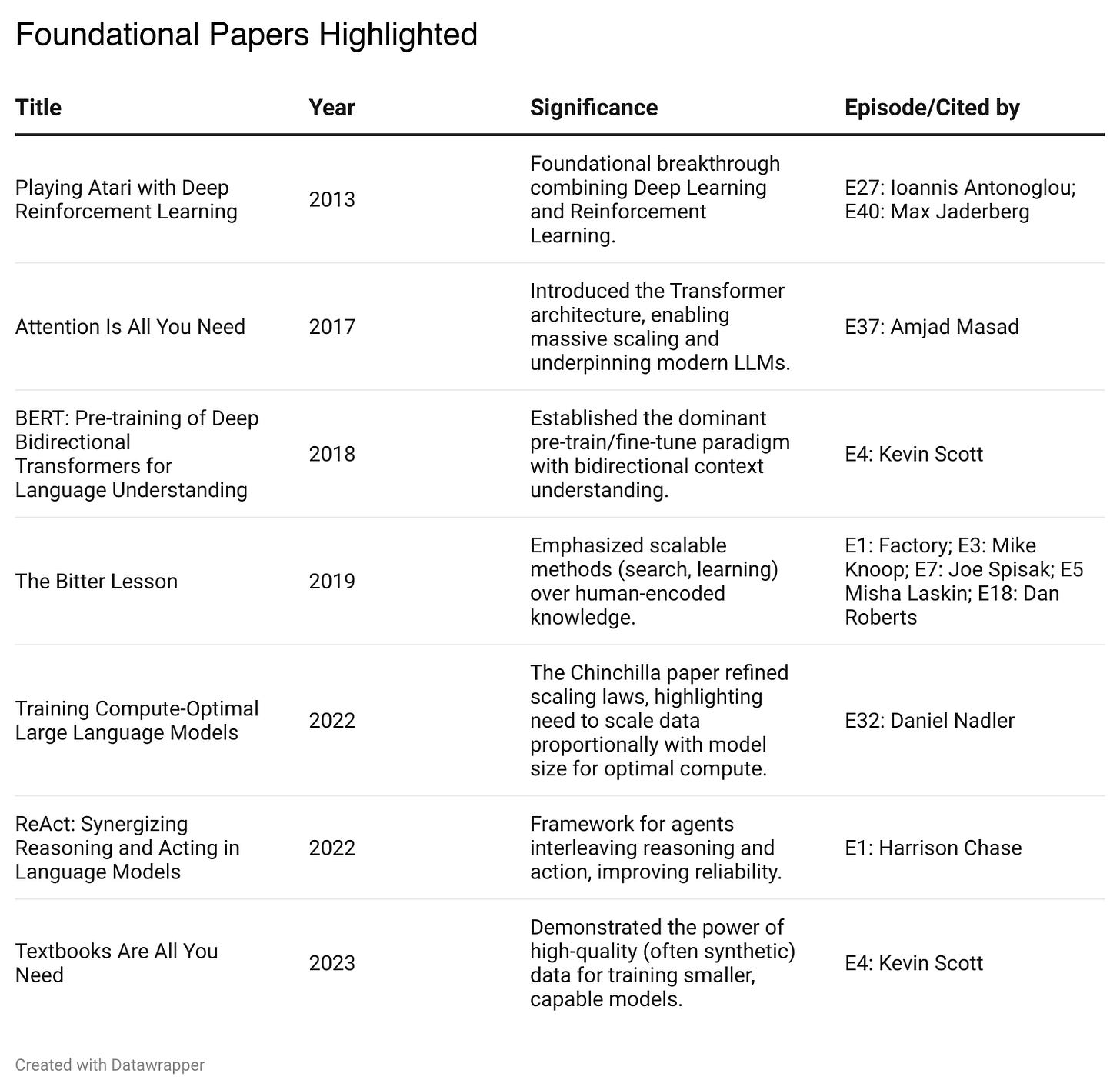

Here are the most important papers that Training Data guests have discussed and a list of all the papers. Enjoy the information!

Post Methodology: Gemini 2.5 Pro with the Deep Research prompt: using links from [training data podcast episode pages] look at all of the research papers cited in the "mentioned in this episode" section of each transcript. make one list of all of the papers. make another of the papers cited more than once (eg The Bitter Lesson). then do an analysis of why these papers are significant for contemporary AI founders. The report was reworked in Gemini Canvas to make it more concise and then hand edited and reformatted for the Substack editor.

This report reviews key research papers cited by leading AI builders featured on Sequoia’s "Training Data" podcast. Familiarizing yourself with this research can inform decisions about technology, product, data, and competitive positioning.

The podcast features conversations with guests from organizations like OpenAI, Google, Microsoft, Databricks, Nvidia, and many high-growth startups. The research papers mentioned reflect concepts these leaders consider significant for founders, bridging technical research and strategic application. By understanding the lineage of ideas—from deep reinforcement learning and attention mechanisms to scaling laws and data quality—founders can better anticipate challenges and identify opportunities.

Early work, such as applying NLP to code and Deep Q-Networks to games, laid crucial groundwork. Progress accelerated after 2017, driven by the Transformer architecture, and the research since then emphasizes large models (Transformers, BERT), scaling principles (Chinchilla), data quality (phi-1), and capable agents (ReAct).

Decoding the Breakthroughs Shaping Today's AI

Understanding the core ideas of key research provides a map for navigating the AI terrain.

Learning to Act: DQN and the Rise of RL Agents (2013)

Before the LLM explosion, DeepMind's 2013 paper "Playing Atari with Deep Reinforcement Learning" marked a seminal moment for reinforcement learning (RL). Deep Q-Networks (DQN) successfully combined deep learning (CNNs) with Q-learning to learn gameplay policies directly from pixel-level screen inputs. This bypassed the need for hand-crafted feature engineering prevalent in prior RL methods.

The impact was transformative: a single architecture, with no game-specific adjustment, learned to play seven Atari games from pixels and scores alone, and surpassed a human expert on three of them. This demonstrated deep RL's power and generality for learning complex sequential decision-making without explicit rules. DQN proved agents could acquire sophisticated skills autonomously, laying conceptual groundwork for subsequent RL advances and modern AI agents interacting with complex environments. It showed the potential of learning complex behaviors before Transformers enabled large-scale language understanding.
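To make the Q-learning half of DQN concrete, here is a minimal tabular sketch. It is not DeepMind's implementation: DQN replaces the lookup table with a convolutional network over pixels, while this example uses a hypothetical five-state corridor and illustrative hyperparameters so the update rule itself is easy to see.

```python
import random

def q_learning_corridor(n_states=5, episodes=500, alpha=0.5, gamma=0.9,
                        epsilon=0.1, seed=0):
    """Tabular Q-learning on a toy corridor (assumed environment).

    The agent starts in state 0 and receives reward 1 on reaching the
    right-most state, which ends the episode.
    """
    rng = random.Random(seed)
    actions = (-1, +1)  # step left / step right
    q = {(s, a): 0.0 for s in range(n_states) for a in actions}
    goal = n_states - 1
    for _ in range(episodes):
        s = 0
        while s != goal:
            # Epsilon-greedy action selection, breaking ties at random.
            if rng.random() < epsilon:
                a = rng.choice(actions)
            else:
                best = max(q[(s, b)] for b in actions)
                a = rng.choice([b for b in actions if q[(s, b)] == best])
            s_next = min(max(s + a, 0), goal)
            done = s_next == goal
            r = 1.0 if done else 0.0
            # Q-learning target: reward plus discounted best next value.
            target = r if done else r + gamma * max(q[(s_next, b)] for b in actions)
            q[(s, a)] += alpha * (target - q[(s, a)])
            s = s_next
    return q

q_table = q_learning_corridor()
greedy_policy = [max((-1, +1), key=lambda a: q_table[(s, a)]) for s in range(4)]
```

After training, `greedy_policy` holds the preferred action in each non-terminal state. The same target computation is what a DQN-style agent regresses its network outputs toward.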

The Transformer Takes Over: "Attention Is All You Need" (2017)

The 2017 paper "Attention Is All You Need" introduced the Transformer architecture, fundamentally altering NLP and other AI domains. Its core innovation was relying entirely on "self-attention" mechanisms, ditching the sequential processing of RNNs/LSTMs. This enabled massive parallel processing, overcoming a major scalability bottleneck.

Transformers achieved state-of-the-art machine translation results with less training time, paving the way for much larger models (like BERT and GPT) trained on vast datasets. The architecture's flexibility extends beyond text to vision, drug discovery, and more. For founders, especially in language or generative AI, understanding Transformers is non-negotiable. It underpins recent progress (e.g., AI for code) and dictates choices about model design, compute utilization, and scaling potential. Dispensing with recurrence allowed unprecedented parallelization and unlocked the ability to harness massive computation, aligning with the principle that scalable methods drive progress.
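A rough sketch of the scaled dot-product attention at the core of the Transformer may help here. It is written in plain Python for readability and omits the learned projections, multiple heads, and masking a real model uses; the function names and the toy vectors are illustrative assumptions.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries, keys, values):
    """Return (outputs, weights) for softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs, weights = [], []
    # Each query is processed independently of the others, which is why
    # the computation parallelizes across positions.
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        w = softmax(scores)
        out = [sum(wj * v[i] for wj, v in zip(w, values))
               for i in range(len(values[0]))]
        weights.append(w)
        outputs.append(out)
    return outputs, weights

# Self-attention: the same toy token vectors serve as Q, K, and V.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attn_out, attn_weights = scaled_dot_product_attention(tokens, tokens, tokens)
```

Every position attends to every other position in one step, with no recurrence carrying state from token to token.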

Deeper Understanding Through Bidirectionality: BERT (2018)

Google's "BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding" leveraged the Transformer's encoder but introduced the "Masked Language Model" (MLM) objective. Unlike models predicting the next word, MLM masks input tokens and predicts them using both left and right context (bidirectionality). This builds richer contextual representations.

BERT used large-scale unsupervised pre-training followed by task-specific fine-tuning, achieving state-of-the-art results across many NLP benchmarks. It powerfully demonstrated transfer learning via pre-training and solidified the pre-train/fine-tune paradigm. For founders, BERT's legacy is significant for tasks needing nuanced understanding (e.g., enterprise search). Its success stemmed from applying scale to a more effective learning objective (MLM) within a powerful architecture (bidirectional encoders). Founders must assess if their application needs deep contextual understanding (BERT-like) or if generative strengths (decoder-only) suffice. The pre-train/fine-tune strategy remains viable for specialized domains.
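The masking step behind the MLM objective can be sketched as follows. This is a simplification under stated assumptions: it always substitutes a `[MASK]` token, whereas BERT's actual recipe sometimes keeps the original token or swaps in a random one, and the whitespace tokenization and `mask_tokens` helper are purely illustrative.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Return (masked_tokens, labels) for a masked-language-model example.

    labels[i] holds the original token where a mask was applied and None
    elsewhere, so a loss would be computed only on masked positions.
    """
    rng = random.Random(seed)
    masked, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK)
            labels.append(tok)
        else:
            masked.append(tok)
            labels.append(None)
    return masked, labels

sentence = "the model predicts hidden words from both directions".split()
masked_sentence, mlm_labels = mask_tokens(sentence, mask_prob=0.3)
```

Because the model sees the tokens on both sides of each `[MASK]`, it can use left and right context together when predicting the hidden word.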

The Enduring Relevance of "The Bitter Lesson" (2019)

Richard Sutton's essay "The Bitter Lesson" argues that enduring AI progress comes from general-purpose methods leveraging computation (search and learning), not human-encoded knowledge. While domain expertise offers short-term gains, it often doesn't scale with computation. History (chess, Go, vision, speech) shows methods learning from data eventually win.

The essay provides a framework for modern deep learning's success, prioritizing scalability and systems discovering patterns from data. For founders, it's a crucial strategic mindset: be wary of complex, brittle systems based on human rules; bet on architectures and paradigms benefiting from more data and compute; favor learning-based approaches where possible. The true power lies in methods effectively harnessing computation. General algorithms excel at navigating complex solution spaces. This implies prioritizing scalable infrastructure, data pipelines, and architectures like the Transformer.

Optimizing Scale: Chinchilla Scaling Laws (2022)

DeepMind's "Training Compute-Optimal Large Language Models" (the Chinchilla paper) refined scaling principles. Training over 400 models, the authors found that for optimal compute use, model size (parameters) and training data size (tokens) should scale in equal proportion: every doubling of model size calls for a doubling of training tokens. This contradicted the trend of massively increasing model size while keeping data relatively fixed.

Their key finding: many large models were undertrained (too large for their data). Their 70B parameter "Chinchilla" model, trained on about 4x as much data as the 280B Gopher using the same compute budget, significantly outperformed Gopher, GPT-3, and others. Chinchilla doesn't refute the importance of scale highlighted by Sutton but adds a critical layer of nuance: the allocation of computational resources between model parameters and training tokens is paramount for efficiency. It suggests superior performance might come from smaller models trained on proportionally more data. This impacts compute, data acquisition, and model selection decisions. Founders must analyze this trade-off; investing in more/better data might yield better ROI than simply training a larger model on existing data, potentially leading to more performant and cheaper models.
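The trade-off can be checked with back-of-the-envelope arithmetic. The sketch below relies on two assumptions that are not stated in this post: the common approximation that training compute is about 6 FLOPs per parameter per token, and the often-quoted rule of thumb of roughly 20 training tokens per parameter. The token counts are those reported in the Chinchilla paper, and the function names are illustrative.

```python
def training_flops(params, tokens):
    """Approximate training compute with the C ~= 6 * N * D rule of thumb."""
    return 6 * params * tokens

def compute_optimal_split(flops, tokens_per_param=20.0):
    """Split a FLOP budget assuming D = tokens_per_param * N and C = 6 * N * D.

    The 20 tokens-per-parameter default is a rule of thumb, not an exact law.
    """
    params = (flops / (6 * tokens_per_param)) ** 0.5
    return params, tokens_per_param * params

# Reported training setups: Gopher (280B params, 300B tokens) and
# Chinchilla (70B params, 1.4T tokens).
gopher_flops = training_flops(280e9, 300e9)
chinchilla_flops = training_flops(70e9, 1.4e12)
budget_ratio = chinchilla_flops / gopher_flops

# What the rule of thumb suggests for Chinchilla's approximate budget.
suggested_params, suggested_tokens = compute_optimal_split(chinchilla_flops)
```

Under these assumptions the two budgets land within about 20% of each other even though Chinchilla has a quarter of Gopher's parameters, which is the reallocation the paper argues for.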

Agents That Think Before They Act: ReAct (2022)

The 2022 paper "ReAct: Synergizing Reasoning and Acting in Language Models" introduced a framework for more capable and reliable AI agents, building on LLM reasoning and RL's interactive potential. ReAct prompts LLMs to generate interleaved reasoning traces and actions.

Reasoning helps decompose goals, plan, use commonsense, handle exceptions, and identify information needs. Actions allow interaction with external tools (APIs, databases) to gather information or manipulate state. This synergy ("act to reason," "reason to act") improves performance. On tasks like question answering and fact verification, ReAct reduced hallucination by grounding reasoning in retrieved information. Its trajectories are more interpretable. On interactive tasks (simulated household chores, online shopping), ReAct significantly outperformed prior methods that needed far more training data.

ReAct provides a powerful template for founders building the next generation of AI applications (assistants, automation tools, customer service agents) that solve problems using tools in a reasoned manner. This paper was highly influential in shaping the design of many contemporary LLM-based agents that interleave thought and action. It addresses key LLM limits like hallucination and offers a path towards more robust, transparent, and controllable agents, crucial for user trust.
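The interleaved thought, action, and observation loop can be sketched in a few lines. Everything here is a stand-in: `scripted_policy` plays the role of an LLM call, the `lookup` tool is a hypothetical dictionary rather than a real API, and the trace format only loosely mirrors the paper's prompts.

```python
def react_loop(question, policy, tools, max_steps=5):
    """Run a ReAct-style loop and return (answer, trace)."""
    trace = [f"Question: {question}"]
    for _ in range(max_steps):
        # The policy (an LLM in practice) reads the trace so far and
        # proposes a reasoning step plus the next action.
        thought, action, arg = policy(trace)
        trace.append(f"Thought: {thought}")
        trace.append(f"Action: {action}[{arg}]")
        if action == "finish":
            return arg, trace
        # Acting grounds the next reasoning step in an external observation.
        observation = tools[action](arg)
        trace.append(f"Observation: {observation}")
    return None, trace

def scripted_policy(trace):
    """Hypothetical stand-in for an LLM: look something up, then answer."""
    last = trace[-1]
    prefix = "Observation: "
    if last.startswith(prefix):
        return ("The observation answers the question.", "finish", last[len(prefix):])
    return ("I need to look this up.", "lookup", "ReAct publication year")

# Toy knowledge source standing in for a search API or database.
FACTS = {"ReAct publication year": "2022"}
TOOLS = {"lookup": lambda key: FACTS.get(key, "not found")}

answer, trace = react_loop("When was ReAct published?", scripted_policy, TOOLS)
```

The returned `trace` is a readable record of why each action was taken, which is where the interpretability benefit comes from.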

Quality Over Quantity? "Textbooks Are All You Need" (phi-1, 2023)

Microsoft Research's "Textbooks Are All You Need" added data quality to the scaling discussion. Their small 1.3B parameter model, phi-1, was trained on a small dataset (7B tokens) of "textbook quality" data, including filtered web code and synthetically generated Python textbooks/exercises using GPT-3.5. The emphasis was on clear, instructive, self-contained data.

Despite its small size, phi-1 achieved remarkable code generation results (HumanEval pass@1 > 50%), surpassing much larger open-source models. A follow-up, phi-1.5, showed similar gains on natural language tasks. This highlighted that exceptional data quality dramatically boosts learning efficiency, enabling smaller models to achieve high performance. For resource-constrained founders, this suggests that curating or synthetically generating high-quality, targeted datasets is a viable path. While Chinchilla stressed scaling quantity with size, phi-1 implies high quality can alter the calculus. It also validates using LLMs for synthetic data generation. Data strategy must encompass quality; creating domain-specific "digital textbooks" could offer competitiveness without brute-force scaling.
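For readers unfamiliar with the pass@1 figure, the sketch below shows the unbiased pass@k estimator introduced alongside the HumanEval benchmark. Whether phi-1's reported number was computed exactly this way is not stated here, so treat it as background on the metric rather than a reproduction of the paper's evaluation.

```python
from math import comb

def pass_at_k(n, c, k):
    """Estimate pass@k from n sampled solutions, c of which pass the tests.

    Computes 1 - C(n - c, k) / C(n, k): one minus the probability that a
    random draw of k samples contains no passing solution.
    """
    if n - c < k:
        return 1.0  # every draw of k samples must include a passing one
    return 1.0 - comb(n - c, k) / comb(n, k)

# With k = 1 the estimator reduces to the fraction of samples that pass.
half_passing = pass_at_k(n=10, c=5, k=1)
```

A benchmark score is the average of this quantity over all problems, so pass@1 above 50% means that, on average, more than half of single attempts solve the task.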

Other Notable Contributions & Themes

Code as Data: The early "On the Naturalness of Software" paper justified applying NLP models to code by highlighting its statistical regularities (anticipating Transformers), underpinning tools like GitHub Copilot and AI features in Replit.

Specialized Models: Papers like "Do We Still Need Clinical Language Models?" and "LiGNN" emphasize the value of models trained on specific domain data (medicine, LinkedIn graphs), suggesting high performance in verticals often requires tailoring.

Software Engineering Agents: Mention of "SWE-agent" points towards agents automating coding, debugging, and testing, building on code understanding and agentic frameworks like ReAct.

These themes show that alongside scaling general models, significant value lies in vertical specialization, applying AI to specific domains (code, medicine, law, etc.) often using unique data and expertise.

Strategic Imperatives for AI Founders

The research landscape translates directly into critical strategic decisions:

Architecture Choices: Choose between leveraging large foundation models (GPT-4, Claude, etc.) for speed and breadth, or developing smaller, specialized models for potentially better performance, efficiency, and defensibility on niche tasks, especially with high-quality domain data. Hybrid fine-tuning offers a middle ground. Align the approach with the core value proposition – biggest isn't always best.

Mastering the Data-Compute-Quality Equation: Data strategy is crucial. Balance compute allocation (more compute vs. more data, guided by Chinchilla) with data quality (high-quality curated/synthetic data enabling smaller models, inspired by phi-1). Explore synthetic data generation. Data quality is increasingly a key differentiator.

Capitalizing on the Agentic Shift: Move beyond static models towards dynamic agents that reason, plan, and act using tools (inspired by ReAct). This unlocks new product categories and complex automation. Focus on task decomposition, tool integration, reasoning frameworks, and user experience for reliable, trustworthy agents.

Building Defensibility: Sustainable advantage requires moats beyond just model performance. Focus on:

Proprietary Data & Quality: Unique, high-quality domain datasets or superior quality via curation/synthesis.

Domain Specialization: Deep expertise combined with tailored models.

Unique Workflows & Agentic Systems: Complex, hard-to-replicate systems automating valuable processes.

Network Effects & Flywheels: Classic product-led growth dynamics.

Success demands navigating these trade-offs strategically to create unique, defensible value.

Conclusion: Learning from the Research

The key research papers cited narrate the AI revolution: the foundations of interactive learning (DQN), the Transformer's scale, Chinchilla's optimized scaling, the impact of data quality (phi-1), and the rise of reasoning agents (ReAct). Sutton's "Bitter Lesson" provides enduring strategic perspective.

For founders, engaging with these core principles—attention, scaling dynamics, RL, reasoning-acting paradigms, data quality, scalable methods—is indispensable. They inform critical decisions on architecture, resources, product strategy, and differentiation. Understanding this foundational research provides crucial context and foresight for building impactful, defensible AI companies in a rapidly evolving field.

Appendix: Complete list of papers referenced in Training Data through E40

Foundations of Statistical Natural Language Processing, 1999, E39: Manny Medina

On the Naturalness of Software, 2012, E37: Amjad Masad

Playing Atari with Deep Reinforcement Learning, 2013, E27: Ioannis Antonoglou

Attention Is All You Need, 2017, E37: Amjad Masad

BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding, 2018, E4: Kevin Scott

Capture the Flag: the emergence of complex cooperative agents, 2019, E40: Max Jaderberg

AlphaStar: Grandmaster level in StarCraft II using multi-agent reinforcement learning, 2019, E40: Max Jaderberg

The Bitter Lesson, 2019, E1: Factory; E3: Mike Knoop; E5: Misha Laskin; E7: Joe Spisak; E18: Dan Roberts

How will artificial intelligence change medical training?, 2021, E32: Daniel Nadler

The Principles of Deep Learning Theory, 2021, E18: Dan Roberts

Training Compute-Optimal Large Language Models, 2022, E32: Daniel Nadler

ReAct: Synergizing Reasoning and Acting in Language Models, 2022, E1: Harrison Chase

Do We Still Need Clinical Language Models?, 2023, E32: Daniel Nadler

Textbooks Are All You Need, 2023, E4: Kevin Scott

Black Holes and the Intelligence Explosion, 2023, E18: Dan Roberts

LiGNN (Graph Neural Networks at LinkedIn), 2024, E26: Hema Raghavan

SWE-agent: Agent-Computer Interfaces Enable Automated Software Engineering, 2024, E1: Harrison Chase

Machines of Loving Grace, 2024, E38: Patrick Hsu

(Note: Some entries are books, articles, or less formal papers. The original Deep Research report did not include episodes 38-40.)