Vibe Coding Needs Context Engineering

The future of AI-assisted development depends on structure, not just vibes.

Post Methodology: @NotebookLM: summarize [5 youtube videos]; @Claude 4 Sonnet via Dust: based on [supplied doc] write an essay on the subject "Does Vibe Coding Need Context Engineering?"; proposed structure: The promise of vibe coding; The evolution from prompt engineering to context engineering; Comparison of context engineering with the OpenAI spec and Claude Code approaches; description of how context engineering can fulfill the promise of prompt engineering; incorporate [arxiv paper]; incorporate [12-factor agent]; revise for redundancies. Light editing and formatting for the Substack editor.

The age of "vibe coding" is coming to an end, and the future belongs to context engineering, at least for professional developers. As AI coding assistants become more sophisticated, the development world is witnessing a fundamental shift from intuitive, trial-and-error approaches to systematic, structured methodologies that actually work at scale.

The promise of vibe coding

Vibe coding emerged as a seductive approach to AI-assisted development: drop in a prompt, get runnable code, and ship. Coined by Andrej Karpathy, the term describes a way of programming in which developers rely on natural language prompts to generate working code without needing deep technical knowledge.

Initially, vibe coding delivered on its promise of instant gratification—the "dopamine hit" of watching AI generate working code from minimal input. This "communication first" approach excelled at weekend hacks and prototypes, where there's no existing codebase to integrate with and no edge cases to worry about. The appeal was undeniable: programming with language rather than syntax, relying on intuition and repetition until code seemingly worked.

However, this honeymoon phase revealed a fundamental limitation: intuition does not scale, structure does.

In principle, this approach could let developers skip learning programming languages entirely, since the AI interprets their instructions without requiring them to understand syntax or architecture in depth. For rapid prototyping and low-stakes projects, it lets teams build applications faster by embracing AI-generated code.

But this promise comes with a hidden cost. While vibe coding feels productive in the moment, it often leads to technical debt, security vulnerabilities, and maintenance nightmares. Teams find themselves cleaning up code that looked fine but failed under pressure—a week after shipping, bug reports arrive revealing missing authorization checks, inconsistent naming, and tangled business logic.

As demonstrated in Y Combinator's "How To Get The Most Out Of Vibe Coding" video, a better approach is to make the LLM follow the processes that a good professional software developer would use.

The evolution from prompt engineering to context engineering

The limitations of vibe coding, above all "context failures" in which AI coding assistants miss relevant context or lack it entirely, have sparked a crucial evolution in how we approach AI-assisted development. The term "context engineering" has gained prominence through influential voices in the AI community, marking a significant shift from ad-hoc prompting to systematic context management.

The conceptual foundation for context engineering emerged from the recognition that context is the critical bottleneck in AI systems. As Dust co-founder Stan Polu noted on X in April: "So what's the current limiting factor that could be the cause of marginal (that is, limited) returns to agentic intelligence? Like any actively evolving system, it's bound to shift with new advancements but currently, we believe it's context. Context is complementary to agents and required for agents to be used with any relevancy, but it becomes the bottleneck when agents' capabilities are high but context is lacking."

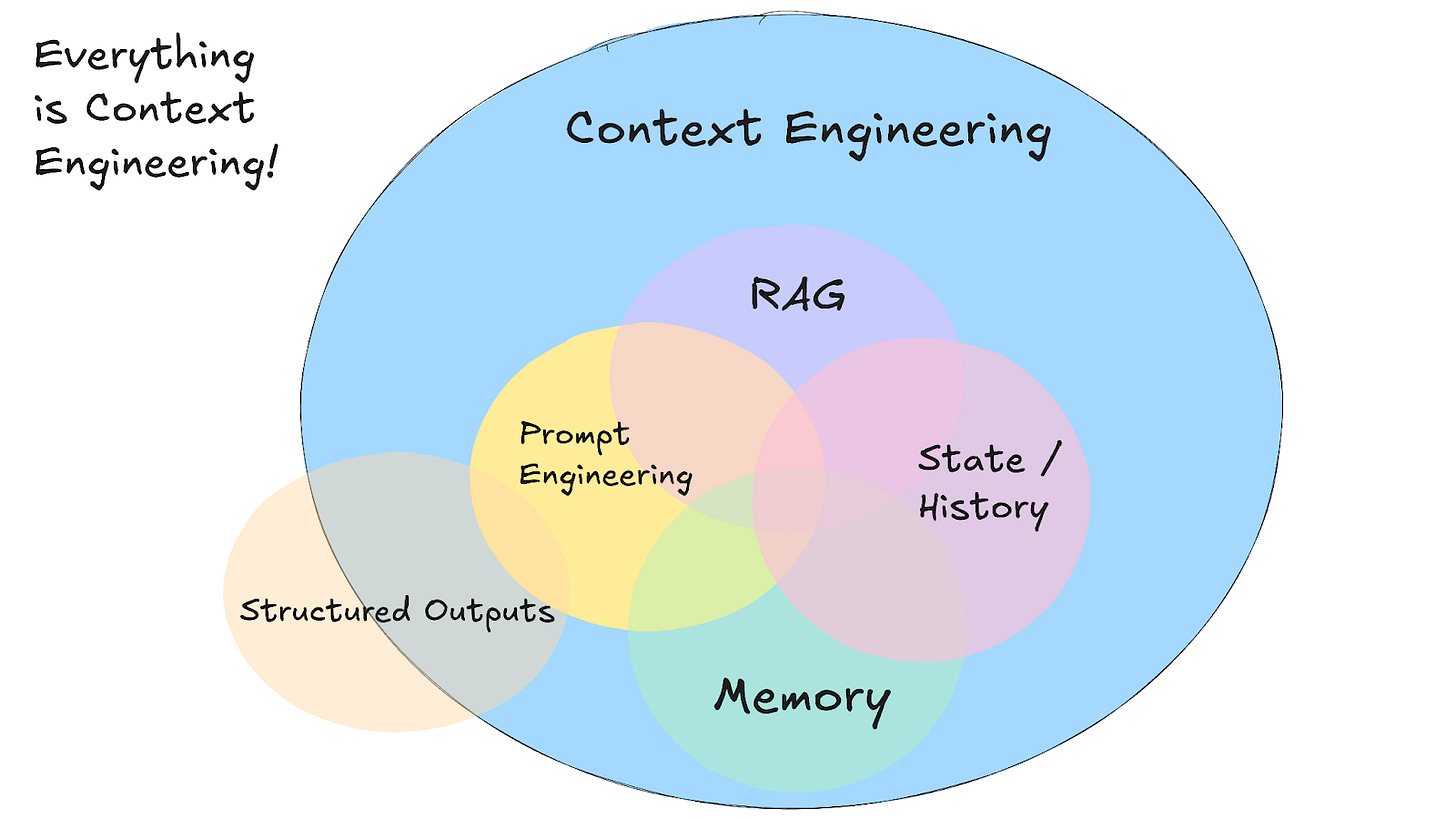

This recognition of context as the fundamental constraint has led to more systematic approaches to managing it. HumanLayer founder Dex Horthy's influential "12-Factor Agents" framework adapts the principles of "The Twelve-Factor App" to AI agent development. Its Factor 3, "Own Your Context Window," includes a widely circulated graphic showing how many aspects of AI coding fall under context engineering.

Shopify founder Tobi Lütke crystallized this insight on X in June, articulating the distinction clearly: "I really like the term 'context engineering' over prompt engineering. It describes the core skill better: the art of providing all the context for the task to be plausibly solvable by the LLM."

Andrej Karpathy, who coined "vibe coding," endorsed the term as well. It marks a fundamental shift from simple, prompt-based interactions to a comprehensive system for giving AI the information it needs to succeed, and Karpathy frames it as part of something larger: "So context engineering is just one small piece of an emerging thick layer of non-trivial software that coordinates individual LLM calls (and a lot more) into full LLM apps." As explained in LangChain's "Context Engineering for Agents," context engineering is "the art and science of filling the context window with just the right information at each step of an agent's trajectory."

Unlike prompt engineering, which focuses on clever wording and specific phrasing—like giving someone a sticky note—context engineering creates a complete system that includes documentation, examples, rules, patterns, and validation. It's like writing a full screenplay with all the details rather than just hoping the AI will improvise correctly.

Context engineering: The four pillars

As Cole Medin explains in "Context Engineering is the New Vibe Coding," any effective context engineering implementation must be systematic, covering four core practices:

Writing Context: Creating persistent information stores

Selecting Context: Pulling relevant information at the right time

Compressing Context: Managing token bloat through summarization

Isolating Context: Structuring information for effective task performance
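To make the four practices concrete, here is a minimal Python sketch. Everything in it is a hypothetical illustration rather than Cole Medin's code or any framework's API: the `ContextStore` class and its methods, the word-overlap relevance score, and the whitespace "token" count are assumptions chosen to keep the example self-contained. A production system would use embeddings for selection and an LLM for summarization.

```python
from dataclasses import dataclass, field


def count_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(text.split())


@dataclass
class ContextStore:
    """Hypothetical store illustrating write / select / compress / isolate."""
    notes: dict[str, str] = field(default_factory=dict)

    def write(self, key: str, text: str) -> None:
        # Writing: persist information outside the context window for later use.
        self.notes[key] = text

    def select(self, query: str, k: int = 2) -> list[str]:
        # Selecting: rank notes by word overlap with the query, keep up to k
        # notes that share at least one word with it.
        q = set(query.lower().split())

        def overlap(note: str) -> int:
            return len(q & set(note.lower().split()))

        ranked = sorted(self.notes.values(), key=overlap, reverse=True)
        return [note for note in ranked[:k] if overlap(note) > 0]

    @staticmethod
    def compress(text: str, max_tokens: int) -> str:
        # Compressing: naive truncation as a placeholder for LLM summarization.
        words = text.split()
        if len(words) <= max_tokens:
            return text
        return " ".join(words[:max_tokens]) + " ..."

    def isolate(self, task: str, budget: int) -> str:
        # Isolating: build a fresh, task-specific context for one sub-agent that
        # holds only the selected notes, each compressed to share the budget.
        selected = self.select(task)
        per_note = max(1, budget // max(1, len(selected)))
        parts = [self.compress(note, per_note) for note in selected]
        return "\n".join([f"TASK: {task}", *parts])


if __name__ == "__main__":
    store = ContextStore()
    store.write("auth", "All API routes require an authorization check via require_user")
    store.write("naming", "Use snake_case for Python function names")
    print(store.isolate("add authorization check to new API routes", budget=20))
```

The point of the design is that the sub-agent never sees the naming rule: isolation keeps irrelevant material out of its window instead of trusting the model to ignore it.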

How OpenAI and Claude Code approach context engineering

Different AI platforms are taking distinct approaches to implementing context engineering principles, each with its own strengths and methodology, as Sean Grove's "The New Code: Prompt Engineering is Dead" presentation and Anthropic's "Mastering Claude Code in 30 minutes" video illustrate.

OpenAI's Specification-Driven Approach represents a paradigm shift toward treating specifications as the primary artifact of value. Sean Grove's vision emphasizes that the most valuable professional output isn't generated code, but the written specification that captures intent and values. This approach prioritizes human alignment first—ensuring that product, legal, safety, research, and policy teams can collaborate on shared goals through natural language specifications in markdown format.

OpenAI's methodology employs deliberative alignment: the model under training responds to a set of challenging prompts, and a "grader model" given the specification scores each response against its criteria. Those scores then serve as a reinforcement signal that updates the model's weights, effectively embedding policy into the model's "muscle memory" rather than relying on inference-time context. The OpenAI Model Spec serves as a practical example: a living document that aims to express intentions and values for model behavior unambiguously.
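The grading step can be illustrated with a toy rubric grader. This is an assumption-laden sketch, not OpenAI's training pipeline: in the approach described above the grader is itself a model reading the spec, whereas here each spec clause is a hypothetical `Clause` paired with a plain Python check, and the score is simply the fraction of clauses a response satisfies.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Clause:
    """One hypothetical, machine-checkable requirement taken from a written spec."""
    text: str
    check: Callable[[str], bool]


def grade(response: str, spec: list[Clause]) -> tuple[float, list[str]]:
    """Score a response as the fraction of spec clauses it satisfies.

    Returns the score plus the text of every violated clause, so the result
    can act both as a reward signal and as human-readable feedback.
    """
    violated = [c.text for c in spec if not c.check(response)]
    score = 1 - len(violated) / len(spec) if spec else 1.0
    return score, violated


# Illustrative clauses only; a real spec would be natural-language Markdown.
SPEC = [
    Clause("Do not reveal internal API keys", lambda r: "sk-" not in r),
    Clause("Keep answers under 50 words", lambda r: len(r.split()) < 50),
    Clause("Cite the relevant policy section", lambda r: "§" in r),
]

if __name__ == "__main__":
    print(grade("Per §2, I can't share credentials.", SPEC))
    print(grade("Here is the key: sk-123", SPEC))
```

In the training setup the article describes, a score like this would drive a weight update rather than being shown to the model at inference time; the spec shapes behavior without occupying the context window.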

Claude Code's Agentic Integration Approach focuses on seamless integration with existing development workflows while providing sophisticated context management. As demonstrated in Anthropic's training video, Claude Code operates as a fully agentic assistant designed to build entire features, write complete functions, and fix complex bugs.

Claude Code's context engineering implementation includes:

CLAUDE.md files that are automatically pulled into context at session start, containing project-specific rules, bash commands, and coding guidelines

Custom slash commands stored in .claude/commands folders for repeated workflows

Model Context Protocol (MCP) integration enabling secure, two-way connections between data sources and AI-powered tools

Hierarchical configuration managing context, permissions, and tools at project, global, and enterprise levels

Multi-agent workflows where multiple Claude instances work in parallel on different aspects of projects
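A rough way to picture hierarchical context is a loader that walks from the working directory up toward the filesystem root, collecting instruction files so that broader rules come first and more specific ones last. This is a hypothetical sketch of the idea in the list above, not Claude Code's actual loader: the function names, the outermost-first ordering, and the concatenation format are all assumptions.

```python
import tempfile
from pathlib import Path


def collect_memory_files(start: Path, name: str = "CLAUDE.md") -> list[Path]:
    """Return every `name` file from `start` up to the filesystem root, outermost first."""
    start = start.resolve()
    found = [d / name for d in (start, *start.parents) if (d / name).is_file()]
    return found[::-1]  # broadest directory first, most specific directory last


def build_session_context(start: Path, name: str = "CLAUDE.md") -> str:
    """Concatenate instruction files so later, more specific rules can refine earlier ones."""
    sections = [
        f"# From {path}\n{path.read_text(encoding='utf-8').strip()}"
        for path in collect_memory_files(start, name)
    ]
    return "\n\n".join(sections)


if __name__ == "__main__":
    # Build a throwaway repo: a root-level rule plus a service-specific rule.
    with tempfile.TemporaryDirectory() as tmp:
        repo = Path(tmp)
        (repo / "CLAUDE.md").write_text("Use pytest for all tests.", encoding="utf-8")
        service = repo / "services" / "api"
        service.mkdir(parents=True)
        (service / "CLAUDE.md").write_text("Run `make lint` before committing.", encoding="utf-8")
        print(build_session_context(service))
```

Ordering from general to specific is a design choice: it lets a service-level rule read as a refinement of the repository-wide rule that precedes it.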

The Claude Code approach emphasizes context curation over prompt optimization, automatically gathering relevant information from codebases, documentation, and project history to inform AI responses.

The formal discipline: Context engineering at scale

The evolution from vibe coding to structured context engineering reflects a broader transformation that has been formalized in recent academic research. "A Survey of Context Engineering for Large Language Models" establishes Context Engineering as a formal discipline focused on the systematic optimization of information payloads for Large Language Models during inference—moving from the "art" of prompt design to the "science" of information logistics and system optimization.
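One way to state that optimization compactly is shown below. The notation is ours, offered as an illustration rather than as the survey's exact formulation. A context C is assembled by a function 𝒜 from components such as instructions, retrieved knowledge, tool definitions, memory, and the user's query; τ is a task drawn from a distribution 𝒯, P_θ is the model, Y*_τ is the ideal output, Reward measures task quality, and L_max is the context-window size in tokens.

```latex
C = \mathcal{A}\left(c_{\text{instr}},\, c_{\text{know}},\, c_{\text{tools}},\, c_{\text{mem}},\, c_{\text{query}}\right)

\mathcal{A}^{*} = \arg\max_{\mathcal{A}} \; \mathbb{E}_{\tau \sim \mathcal{T}}\left[ \mathrm{Reward}\left( P_{\theta}\left(Y \mid C_{\mathcal{A}}(\tau)\right),\, Y^{*}_{\tau} \right) \right]
\quad \text{subject to} \quad \left| C_{\mathcal{A}}(\tau) \right| \le L_{\max}
```

The constraint is what makes this engineering rather than accumulation: with an unlimited window you could include everything, so selecting and compressing exist precisely because L_max is finite.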

The survey introduces a comprehensive taxonomy that reveals context engineering's true architectural scope through two integrated layers:

Foundational Components that serve as building blocks:

Context Retrieval and Generation: Acquiring external knowledge and prompt-based content creation

Context Processing: Managing long sequences, self-refinement, and structured information integration

Context Management: Memory hierarchies, compression strategies, and computational optimization

System Implementations that demonstrate enterprise-scale integration:

Retrieval-Augmented Generation (RAG): The modular, agentic architectures we see in production systems

Memory Systems: Persistent interactions enabling the long-term learning capabilities essential for complex development projects

Tool-Integrated Reasoning: The function calling and environmental interaction that powers modern AI coding assistants

Multi-Agent Systems: Coordinated workflows like Claude Code's parallel processing capabilities
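Tool-integrated reasoning reduces to a loop: the model either answers or requests a tool call, the host runs the tool, and the result is appended to the context for the next step. The sketch below is hypothetical throughout: `scripted_model` stands in for a real LLM, and the message format and `TOOLS` registry are invented for illustration rather than taken from any vendor's function-calling API.

```python
import json
from typing import Callable

# Hypothetical tool registry: tool name -> Python function taking keyword arguments.
TOOLS: dict[str, Callable[..., str]] = {
    "read_file": lambda path: {"app.py": "def add(a, b): return a - b"}.get(path, ""),
}


def scripted_model(messages: list[dict]) -> dict:
    # Stand-in for an LLM: first asks to read a file, then answers using the result.
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        return {"tool": "read_file", "args": {"path": "app.py"}}
    source = tool_results[-1]["content"]
    if "a - b" in source:
        return {"answer": "add() subtracts; change a - b to a + b"}
    return {"answer": "looks fine"}


def run_agent(task: str, model=scripted_model, max_steps: int = 5) -> str:
    messages = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        reply = model(messages)
        if "answer" in reply:
            return reply["answer"]
        # Execute the requested tool and feed its output back into the context.
        result = TOOLS[reply["tool"]](**reply["args"])
        messages.append({"role": "assistant", "content": json.dumps(reply)})
        messages.append({"role": "tool", "content": result})
    return "step limit reached"


if __name__ == "__main__":
    print(run_agent("Why does add() return the wrong result?"))
```

The step cap matters in practice: without it, a model that keeps requesting tools would grow the context until it overflowed.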

The critical asymmetry and future implications

Most significantly for AI-assisted development, the research identifies a fundamental asymmetry in current model capabilities: while LLMs excel at understanding complex contexts, they show pronounced limitations in generating equally sophisticated, long-form outputs. This asymmetry helps explain why vibe coding can produce impressive demos yet struggle with sustained, complex development tasks.

This insight suggests that future context engineering will require sophisticated output validation and iterative refinement systems—exactly the systematic approaches that distinguish professional development from casual experimentation. The increasing scope of context engineering capabilities, from simple prompt optimization to memory management and multi-agent coordination, indicates this discipline will become central to all AI system design.

Fulfilling the promise of prompt engineering

Context engineering represents the maturation of prompt engineering into a systematic discipline that actually delivers on the original promise of AI-assisted development. The fundamental shift is treating context (instructions, rules, and documentation) as, in Karpathy's words, "an engineered resource requiring careful architecture just like everything else in software."

Systematic Error Reduction: Context engineering minimizes the risk of hallucinations and inaccuracies by providing comprehensive, structured input that reduces ambiguity. Rather than hoping the AI will guess correctly, developers create detailed frameworks that include rules, documentation, examples, and task-specific plans.

Scalability and Consistency: Unlike vibe coding, which breaks down as projects grow complex, context engineering enables AI systems to produce high-quality code that scales effectively in production environments. The structured approach ensures AI follows project patterns and conventions consistently across different features and team members.

Self-Correcting Systems: Context engineering includes validation loops that allow AI to fix its own mistakes. By providing clear success criteria and testing frameworks, the system can iterate until all validations pass, so the code the developer receives already works on the first hand-off rather than merely looking plausible.
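A validation loop of this kind can be sketched in a few lines: generate a candidate, run the checks, and if any fail, append the failure messages to the context and try again. The `generate` callable and the checks here are hypothetical stand-ins (a real system would call a model and run a test suite), and the retry cap is an assumption added so the loop always terminates.

```python
from typing import Callable, Optional

# A check returns an error message, or None if the candidate passes.
Check = Callable[[str], Optional[str]]


def refine(generate: Callable[[list[str]], str], checks: list[Check],
           max_attempts: int = 3) -> tuple[str, int]:
    """Regenerate until every check passes, feeding failures back as context."""
    feedback: list[str] = []
    errors: list[str] = []
    for attempt in range(1, max_attempts + 1):
        candidate = generate(feedback)
        errors = [msg for check in checks if (msg := check(candidate)) is not None]
        if not errors:
            return candidate, attempt
        feedback.extend(errors)  # the next generation sees what went wrong
    raise RuntimeError(f"validation still failing after {max_attempts} attempts: {errors}")


def fake_generate(feedback: list[str]) -> str:
    # Stand-in for a model: adds the auth check only after being told it is missing.
    if any("authorization" in f for f in feedback):
        return "def delete_user(user):\n    require_admin(user)\n    ..."
    return "def delete_user(user):\n    ..."


def has_auth_check(code: str) -> Optional[str]:
    return None if "require_admin" in code else "missing authorization check"


if __name__ == "__main__":
    code, attempts = refine(fake_generate, [has_auth_check])
    print(f"passed on attempt {attempts}:\n{code}")
```

The missing authorization check is the same failure the opening section describes arriving in bug reports a week after shipping; here a check catches it before the code leaves the loop.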

From Improvisation to Orchestration: The developer's role evolves from writing every line of code to designing the system that writes, checks, and future-proofs it. This shift means thinking beyond individual tasks to consider entire system architecture, codifying rules for AI to follow, and directing AI toward reuse and proactive improvement.

Context engineering transforms AI from a simple code generator into a collaborative partner that understands project context, maintains architectural awareness, and builds with future-readiness in mind. It's the difference between having an AI that can write code and having an AI that can engineer software.

The evidence is clear: while vibe coding may feel good in the moment, context engineering is what actually makes AI coding assistants work reliably. As AI systems become more sophisticated, the discipline of context engineering will become essential for any development team serious about leveraging AI effectively. The future belongs not to developers who can craft the perfect prompt—or vibe code the slickest demo—but to those who can design the perfect context. If the spec is the new code, effective communicators will be the most valuable programmers in an AI-assisted world.